In recent years, artificial intelligence has played a prominent role in the area of health.

Its rapid adoption must meet a range of technical, legal, policy and budgetary requirements.

1. Technical aspects

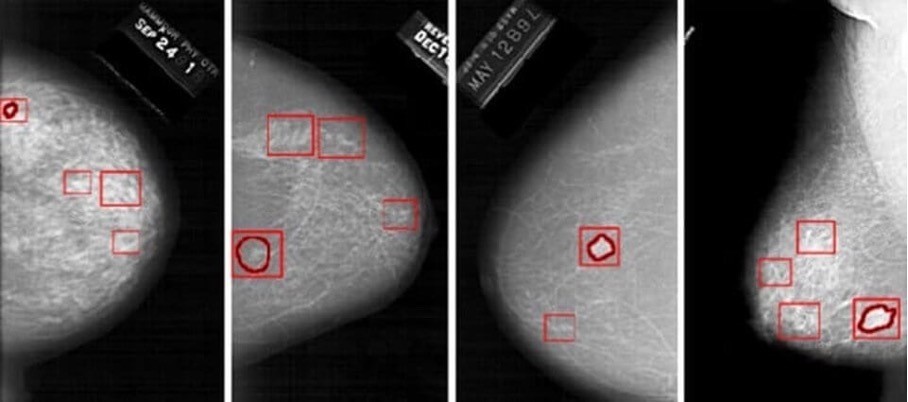

Artificial intelligence (AI) is currently reshaping the world, especially in the area of health. In particular, it can help detect various diseases, such as cancers and tumours, sometimes at an early stage of their development.

AI models are being trained on very large quantities of healthcare data, particularly medical images such as X-rays, CAT scans, MRIs and other imaging results.

Examples of AI detection capabilities in medical imaging:

- Detection of neurological anomalies

- Detection of common cancers (breast, lung, etc.)

- Diagnosis of kidney and liver infections

- Detection of brain tumours with a high level of accuracy

- Analysis of dental images using machine learning

- Detection of bone fractures and musculoskeletal lesions

The risk flagged by the model can prompt the doctor to detect traces of cancer at a very early stage, traces that might otherwise have been missed because of fatigue, overwork or poor-quality images.

It must be noted that the doctor always makes the final decision on the patient's diagnosis. AI provides guidance but never issues an opinion on the diagnosis or on the measures to be taken.

The legal aspects of AI in healthcare are addressed below.

2. Legal aspects

Faced with the fast pace of technological progress in AI and the associated risks in terms of safety and fundamental rights, the European Commission has proposed a much-anticipated legal framework in the form of a draft regulation (the Artificial Intelligence Act), accompanied by a coordinated action plan. The text was submitted in April 2021 and is still under discussion in order to reconcile the various countries' points of view. No date has been set for the moment, but the text is expected to be adopted in 2023.

The principles of quality, integrity, safety, robustness, resilience, supervision, explainability, interpretability, transparency, reliability, traceability, human oversight and auditability were previously identified in various European texts and have been implemented by developers and publishers through governance systems of varying effectiveness. The draft regulation translates them into specific obligations for providers, applying from the design of an AI system and throughout its life cycle.

This set of requirements gives legislative force to best practices for the development and monitoring of high-risk AI systems, including the obligations of providers and professional users, and defines governance conditions as well as penalties for infringements.

In the healthcare sector, given the challenges associated with the use of AI systems as decision-making and diagnostic tools, the relevance and accuracy of AI data shall be guaranteed. This requires these systems to be placed on the market in accordance with the regulations on medical devices (Regulation (EU) 2017/745) and data protection (Regulation (EU) 2016/679). The compliance of a high-risk AI system shall be assessed from the design stage and then throughout its life cycle, in particular through the establishment of an iterative risk management system.

The technical documentation of a high-risk AI system shall be drawn up before that system is placed on the market or put into service and shall be kept up to date. It shall demonstrate that the high-risk AI system complies with the requirements of the Artificial Intelligence Act and shall provide notified bodies and competent authorities with all the information necessary to assess the system's compliance with those requirements.

Machine learning AI systems shall be developed using high-quality training, validation and testing datasets subject to appropriate data management and governance practices. The Artificial Intelligence Act states that, to the extent strictly necessary to ensure bias monitoring, detection and correction in high-risk AI systems, providers of such systems may process special categories of personal data, including health data, subject to appropriate safeguards such as pseudonymisation or encryption where anonymisation may significantly affect the purpose pursued (for example, in clinical investigations).

In France, it can still be complicated to access manufacturers' datasets with a view to developing AI systems. Similarly, for anonymised datasets, the legal conditions applying to anonymisation need to be specified: the qualifications of the processors, the applicable legal bases, the conditions of compliance, and the rights and freedoms of the persons concerned.
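As an illustration of the pseudonymisation safeguard mentioned above, the following minimal Python sketch replaces a direct patient identifier with a keyed hash (HMAC-SHA-256). The record fields, identifiers and key handling are hypothetical, and the technique is one common approach chosen for illustration, not a method prescribed by the Artificial Intelligence Act or a compliance-grade implementation.

```python
import hashlib
import hmac
import secrets


def pseudonymise(patient_id: str, key: bytes) -> str:
    """Map a direct identifier to a deterministic keyed pseudonym (HMAC-SHA-256, hex)."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_record(record: dict, key: bytes) -> dict:
    """Return a copy of the record with 'patient_id' replaced by a 'pseudonym' field."""
    out = {k: v for k, v in record.items() if k != "patient_id"}
    out["pseudonym"] = pseudonymise(record["patient_id"], key)
    return out


# Hypothetical secret key: in practice it would be stored separately from the dataset.
key = secrets.token_bytes(32)

# Hypothetical imaging records, for illustration only.
records = [
    {"patient_id": "FR-000123", "modality": "MRI", "finding": "suspected tumour"},
    {"patient_id": "FR-000456", "modality": "X-ray", "finding": "fracture"},
    {"patient_id": "FR-000123", "modality": "CT", "finding": "follow-up"},
]

pseudonymised = [pseudonymise_record(r, key) for r in records]
```

Because the same key always yields the same pseudonym, records belonging to one patient can still be linked (for example, for bias monitoring) without the identifier itself being kept in the dataset. Whoever holds the key can re-identify patients, so pseudonymised data remains personal data under Regulation (EU) 2016/679, and the key must be kept separately under strict access control.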

3. Policy and budgetary aspects

The French public authorities have clearly recognised the role played by digital technology in the area of health.

They have started developing a 5P (personalised, preventive, predictive, participatory and precision) approach to medicine to benefit citizens, patients and the healthcare system. This approach relies, for example, on connected objects, service platforms, AI, digital medical devices, digital twins, trial simulators, robotics and many other technologies.

On 29 June 2022, the President of the French Republic presented the "Health Innovation 2030" plan, worth more than €7.5 billion, at the Strategic Council of Health Industries (CSIS). The aim is to make France one of Europe's leading nations for health innovation.

Furthermore, the €650 million “Santé Numérique” acceleration strategy under the “Investissements d’avenir” programme is promoting the development, validation and testing of digital tools for this 5P approach to medicine.

To learn more about these financing arrangements, visit the following page: Investissements d'avenir: launch of the "Santé Numérique" acceleration strategy | enseignementsup-recherche.gouv.fr

CONCLUSION:

The use of AI in health is growing, and it is automating repetitive tasks for healthcare professionals so that they have more time to focus on activities that bring real added value to patients.

AI and the digitisation of healthcare are also being used to help address the shortage of doctors in certain regions and the declining number of practising physicians.

However, let us make no mistake: the aim is not to replace doctors with machines; rather, it is to organise virtuous interactions between human expertise and AI systems.

Efor, the European leader in life sciences consulting, assists you with the technical and regulatory aspects of the development process for your AI systems.

Our experts will be pleased to provide you with support through the various stages of your product’s development, from its design to its maintenance on the market.

Contact them directly by writing to onedt@efor-group.fr